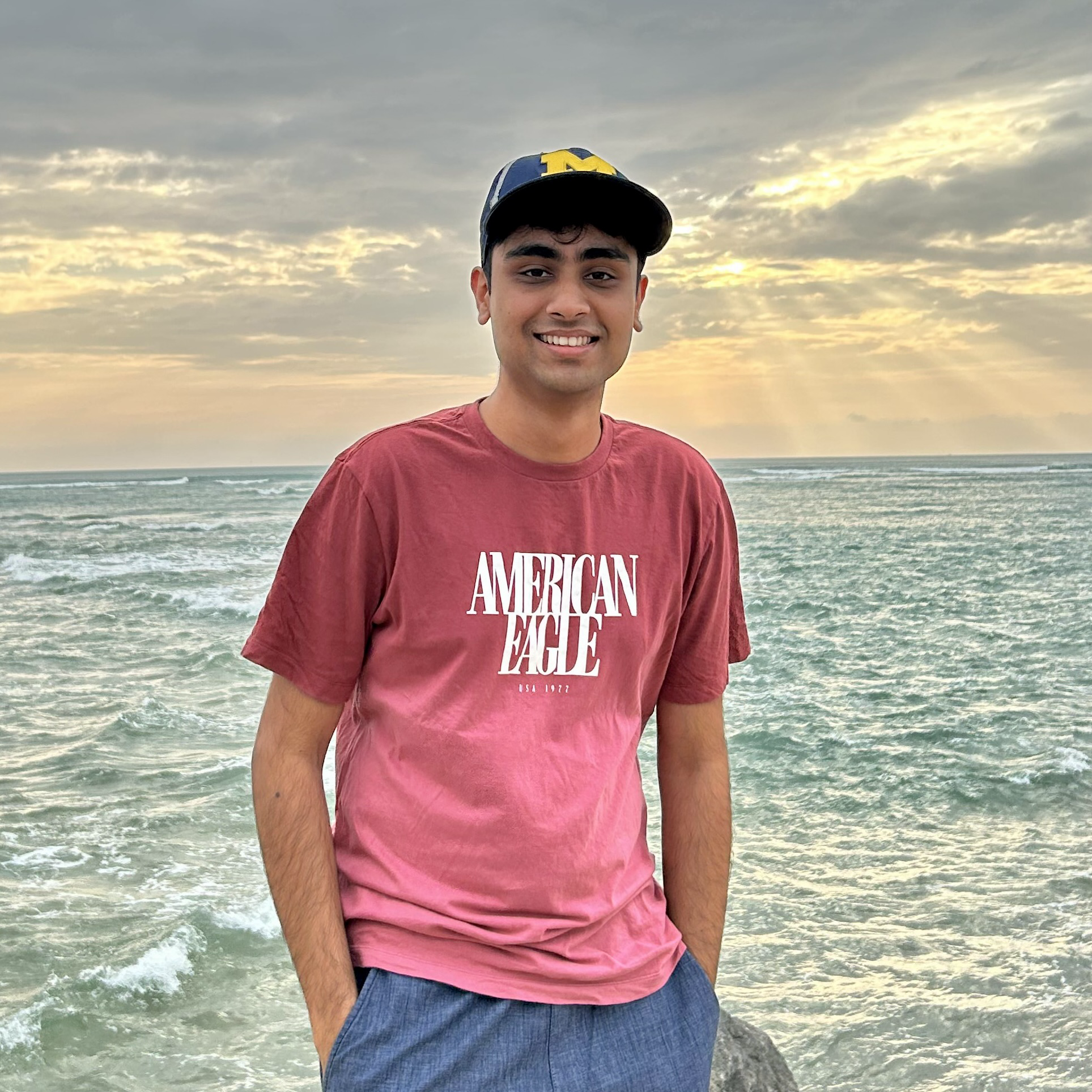

Pranav Gupta

Multimodal AI • Vision+Language • Generative Models

Master's student (incoming) in CSE at the University of Michigan, Ann Arbor. I build multimodal systems and study natural-language supervision for vision tasks.

I will be starting my Master's in CSE at the University of Michigan, Ann Arbor. My research interests center on multimodal AI and natural-language supervision for vision tasks. I'm currently a Research Intern at Stanford's PanLab, working on multimodal AI in neuroimaging, and an intern at DREAM:Lab (IISc), working on deep learning for edge accelerators.

Past stints include research and data science projects at Samsung, Upthrust, and AarogyaAI.

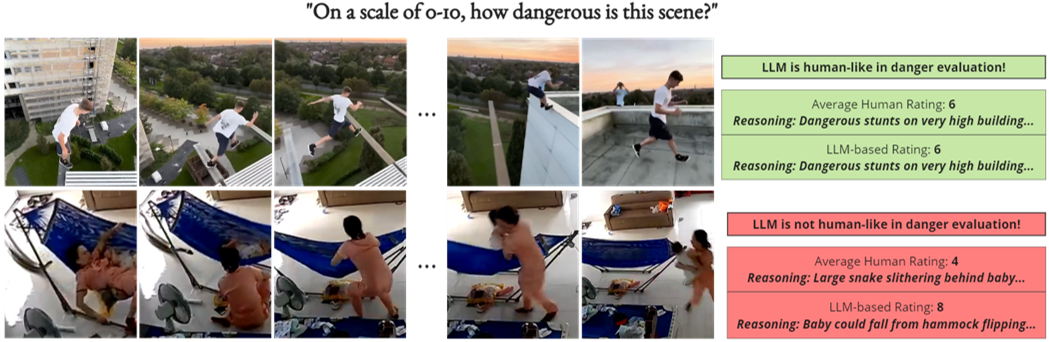

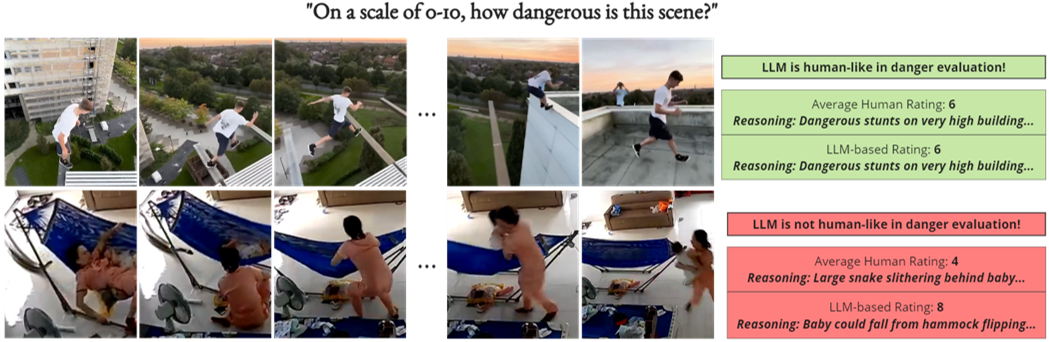

ICVGIP 2024

Human-like danger assessment from videos using LLM-based reasoning, including its failures on subtle cues.

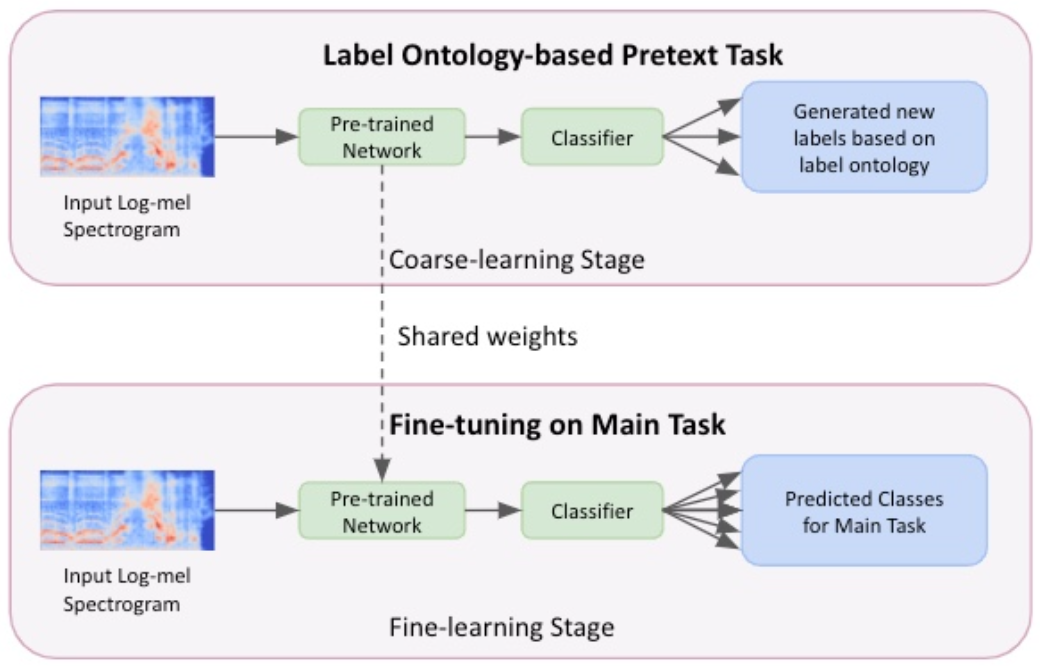

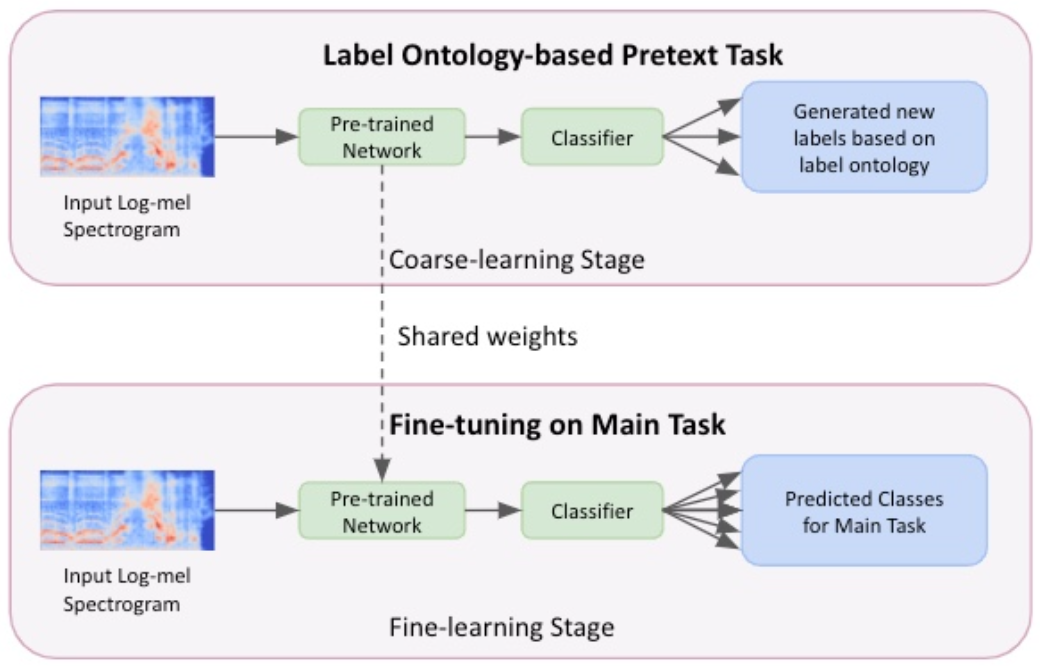

IEEE CONECCT 2024

Label-ontology guided pretext tasks improve audio classification via semi-supervised learning.

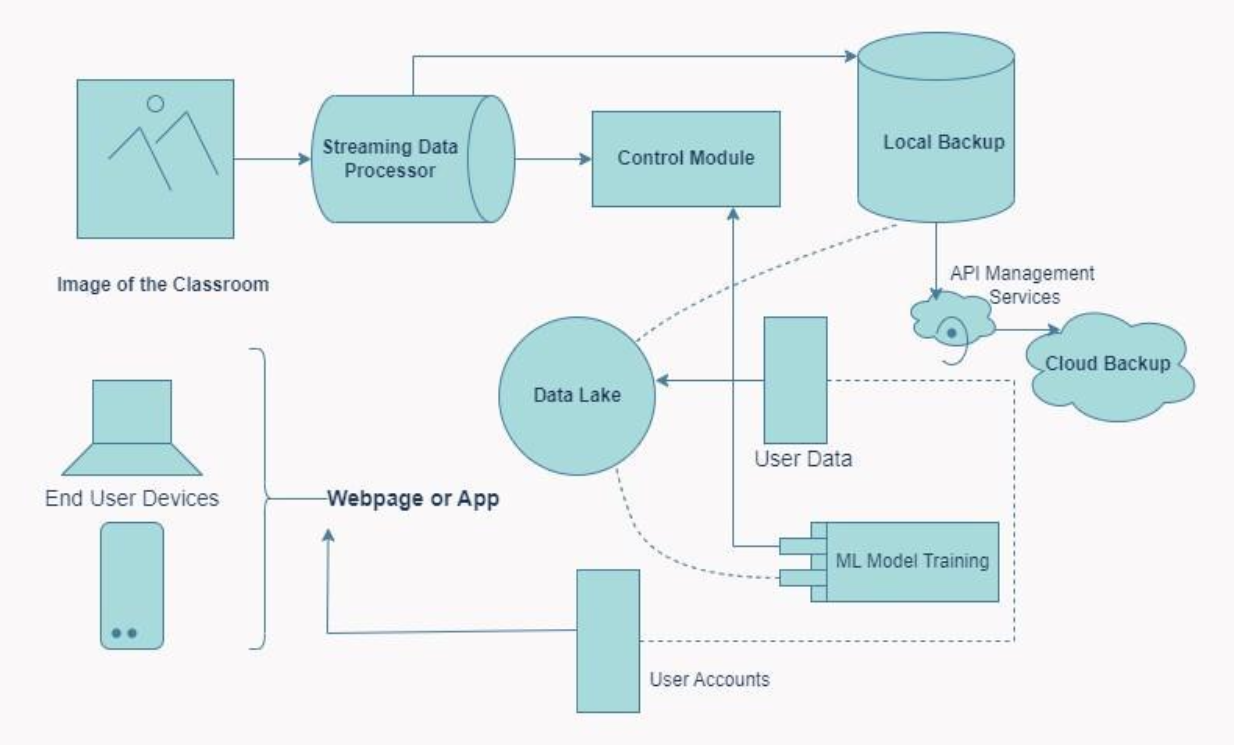

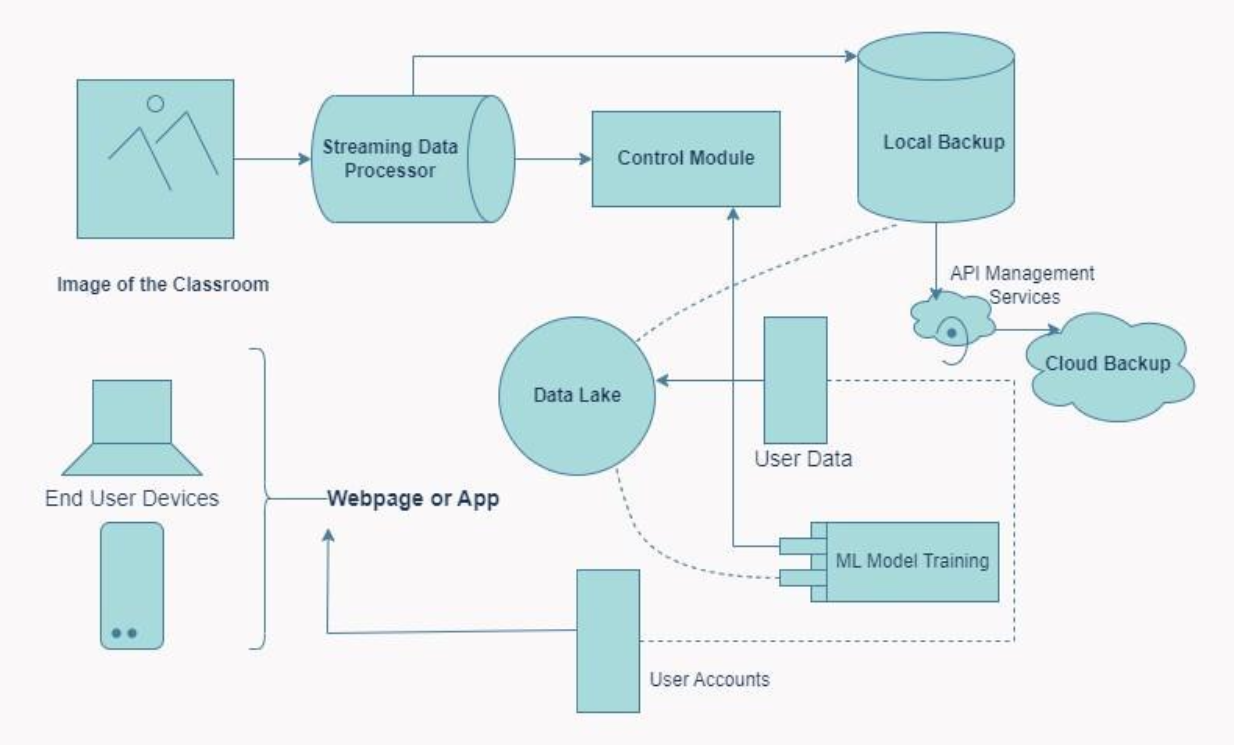

AAIMB 2023

A camera-first pipeline for attendance with privacy-preserving data flows.

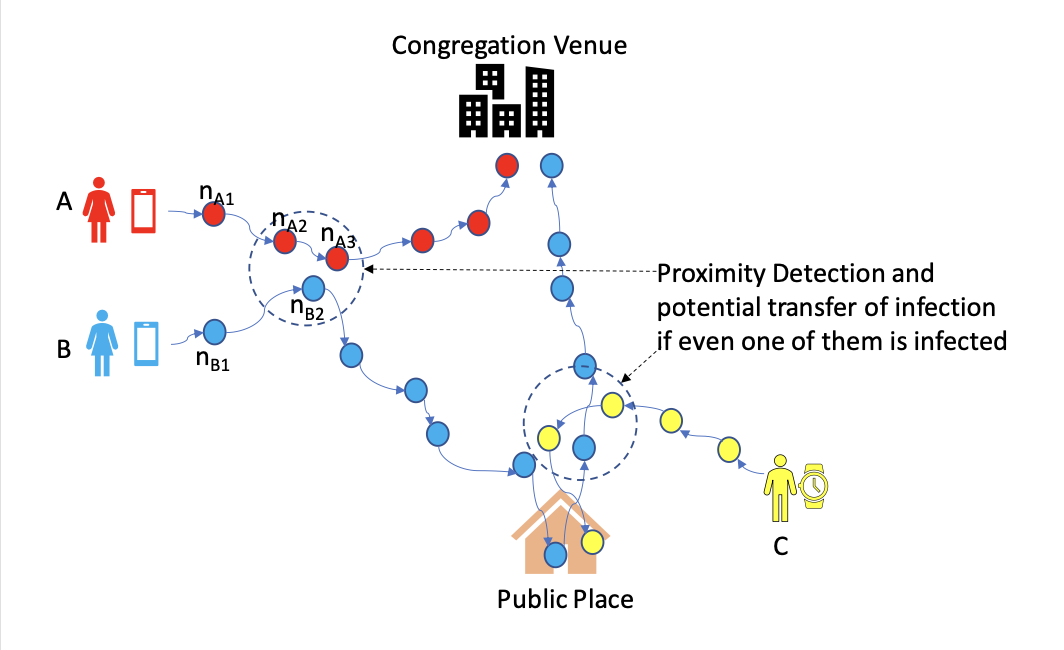

IEEE CCEM 2020

Graph-based proximity modeling to estimate contagion transfer risk in gatherings.

Predict the next best word by parsing a screenshot of a partially-filled Wordle.

Query your search history content semantically to jump to the right page.

Parse a bill image and auto-create Splitwise entries end-to-end.

Evolve CNN kernels and pooling to optimize MNIST classification.

One-vs-all and one-vs-one SVMs using FaceNet embeddings on LFW.

Clean PyTorch/TensorFlow implementations for core vision & audio papers.